Quantum computing stands at the frontier of computational science, promising to revolutionize fields ranging from cryptography to drug discovery. This article examines the current state of quantum computing research, recent breakthroughs, and the challenges that remain on the path to practical quantum advantage.

Fundamental Principles

Before diving into recent developments, it's worth briefly reviewing the fundamental principles that distinguish quantum computing from classical computing:

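- Quantum Bits (Qubits): Unlike classical bits, which are either 0 or 1, qubits can exist in a superposition of both states. Describing n qubits requires 2^n complex amplitudes, but measuring them yields only a single n-bit outcome, so superposition alone does not let a quantum computer read out the results of many computations in parallel.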

- Entanglement: Quantum entanglement allows qubits to be correlated in ways that have no classical analog, creating computational resources that can be harnessed for specific algorithms.

- Quantum Interference: Quantum algorithms manipulate probability amplitudes to enhance correct answers and suppress incorrect ones through interference effects.

These properties enable quantum computers to potentially solve certain problems exponentially faster than classical computers, though this advantage applies only to specific types of problems.
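To make these three properties concrete, here is a minimal two-qubit state-vector sketch in plain Python. It assumes ideal, noise-free gates. The helper names `apply_single`, `apply_cnot` and `probabilities` are hypothetical and not part of any quantum SDK. One Hadamard creates a superposition. Applying a CNOT to that state entangles the qubits into a Bell state. Applying a second Hadamard to it instead shows two amplitudes cancelling through interference.

```python
import math

# Minimal two-qubit state-vector sketch: ideal, noise-free gates only.
# Basis order is |00>, |01>, |10>, |11>; qubit 0 is the left bit.
S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard gate


def apply_single(state, gate, target):
    """Apply a 2x2 gate to one qubit (0 = left bit) of a 2-qubit state."""
    mask = 1 << (1 - target)
    new = [0j] * 4
    for idx, amp in enumerate(state):
        bit = 1 if idx & mask else 0
        for out_bit in (0, 1):
            out_idx = idx if out_bit == bit else idx ^ mask
            new[out_idx] += gate[out_bit][bit] * amp
    return new


def apply_cnot(state):
    """CNOT with qubit 0 as control: swaps the |10> and |11> amplitudes."""
    s = list(state)
    s[2], s[3] = s[3], s[2]
    return s


def probabilities(state):
    return [round(abs(a) ** 2, 6) for a in state]


zero = [1 + 0j, 0j, 0j, 0j]

plus = apply_single(zero, H, 0)  # superposition of |00> and |10>
print(probabilities(plus))  # [0.5, 0.0, 0.5, 0.0]

bell = apply_cnot(plus)  # entangled Bell state (|00> + |11>)/sqrt(2)
print(probabilities(bell))  # [0.5, 0.0, 0.0, 0.5]

back = apply_single(plus, H, 0)  # paths into |10> cancel by interference
print(probabilities(back))  # [1.0, 0.0, 0.0, 0.0]
```

In the Bell state, the two qubits always give matching measurement outcomes. In the last case, the second Hadamard sends the left qubit back to |0> with certainty.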

Current Hardware Approaches

Several competing approaches to building quantum computers have emerged, each with distinct advantages and challenges:

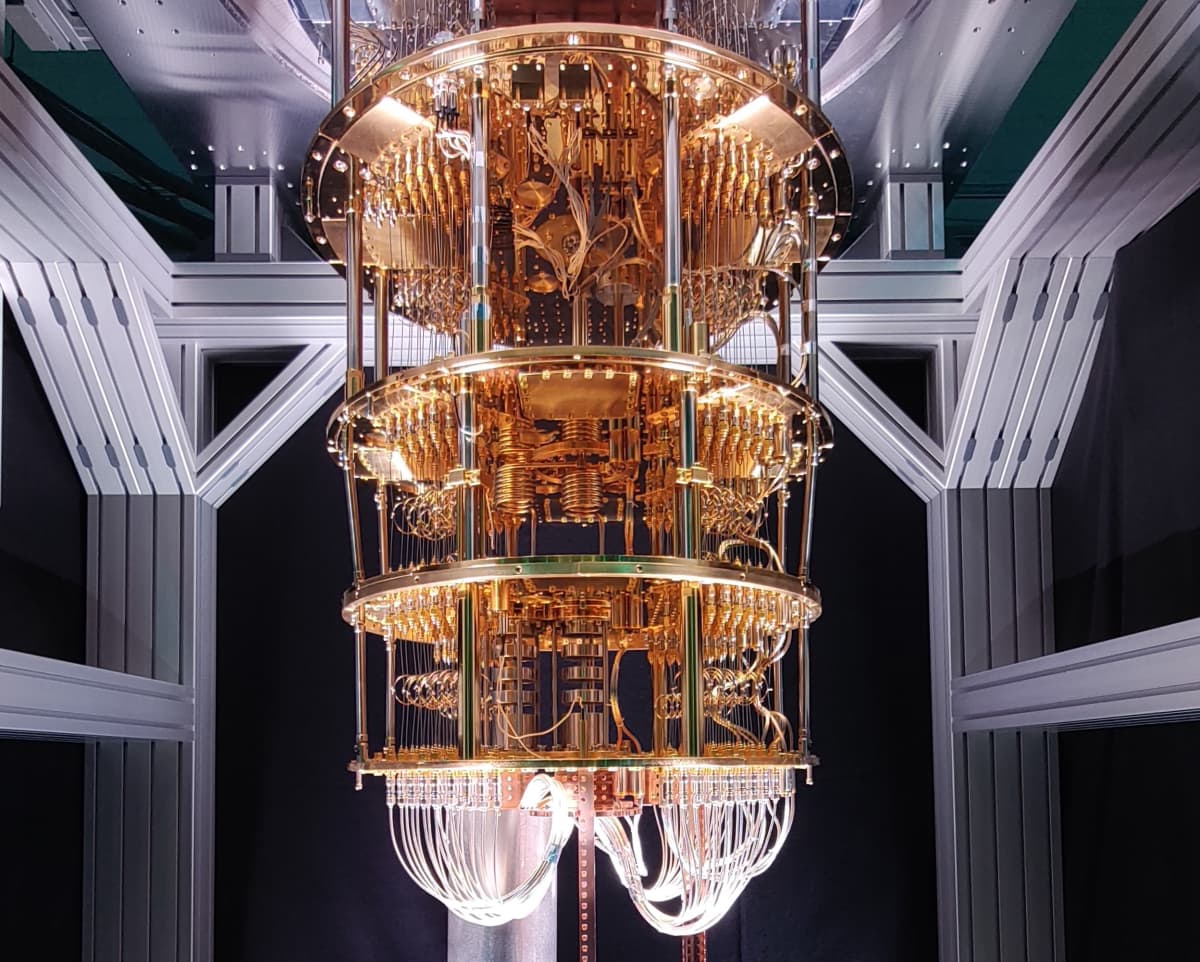

Superconducting Qubits

Superconducting circuits remain the most mature quantum computing technology, used by companies like IBM, Google, and Rigetti. Recent advances include:

- Improved coherence times, now reaching beyond 300 microseconds in leading systems

- Reduced error rates through better materials and fabrication techniques

- Scaling to processors with over 1,000 physical qubits, although each qubit couples directly only to a few neighbors on a fixed lattice rather than to every other qubit

- Implementation of mid-circuit measurements and dynamic circuit execution
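Coherence times matter because they limit how many operations fit into a computation. The sketch below turns the roughly 300-microsecond figure cited above into an approximate gate budget. It assumes a single exponential decay model. It also assumes a hypothetical 50 ns gate duration, which is not taken from any specific device.

```python
import math

# Rough coherence budget under a single-exponential decay model exp(-t / T).
# GATE_TIME_S is a hypothetical, illustrative value, not a device specification.
COHERENCE_TIME_S = 300e-6  # ~300 microseconds, the figure cited above
GATE_TIME_S = 50e-9        # assumed 50 ns per gate layer


def survival_probability(depth, gate_time=GATE_TIME_S, coherence=COHERENCE_TIME_S):
    """Probability that no decoherence event occurs during `depth` sequential gate layers."""
    return math.exp(-depth * gate_time / coherence)


gates_per_coherence_time = COHERENCE_TIME_S / GATE_TIME_S
print(round(gates_per_coherence_time))  # 6000
for depth in (100, 1000, 6000):
    print(depth, round(survival_probability(depth), 3))
```

In this simplified model the decay is gradual rather than a hard cutoff. A circuit as deep as one full coherence time survives with probability of only 1/e, about 37%.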

IBM's roadmap has targeted a 4,158-qubit system by 2025, built from modular quantum processors connected through quantum communication links.

Trapped Ions

Trapped ion quantum computers, developed by companies like IonQ and Quantinuum, offer exceptional qubit quality at the cost of slower gate operations:

- Record-setting fidelities for two-qubit gates exceeding 99.9%

- Long coherence times measured in seconds rather than microseconds

- All-to-all connectivity that simplifies algorithm implementation

- Recent demonstrations of systems with 32 fully connected qubits

The challenge for trapped ion systems remains increasing operation speed and scaling to larger qubit counts while maintaining their exceptional fidelity.
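A crude estimate shows why two-qubit fidelities above 99.9% matter. If each gate fails independently with probability 1 - F, a circuit of n gates succeeds with probability of about F^n. The sketch assumes independent, uncorrelated errors, which real hardware does not strictly satisfy. The helper names are illustrative.

```python
import math


def circuit_success_estimate(fidelity, n_gates):
    """Crude estimate assuming independent gate errors: success ~ fidelity ** n_gates."""
    return fidelity ** n_gates


def max_gates_for_target(fidelity, target):
    """Largest n with fidelity ** n >= target, under the same independence assumption."""
    return math.floor(math.log(target) / math.log(fidelity))


for f in (0.99, 0.999, 0.9999):
    print(f"F={f}: P(1000 gates)={circuit_success_estimate(f, 1000):.3f}, "
          f"max gates with P>=0.5: {max_gates_for_target(f, 0.5)}")
```

Under this model, a 99.9% fidelity supports only about 700 gates before the success probability drops below one half. This is one reason the error correction discussed below is needed.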

Photonic Quantum Computing

Photonic approaches use light particles as qubits and have seen significant recent progress:

- Demonstrations of fault-tolerant operations using measurement-based quantum computing

- Development of integrated photonic circuits that reduce size and improve stability

- Novel approaches using squeezed light states to achieve computational advantage

- Photonic circuits that largely operate at room temperature, although the most efficient single-photon detectors still require cryogenic cooling

Companies like Xanadu and PsiQuantum are pursuing photonic approaches, with PsiQuantum aiming to build a million-qubit fault-tolerant system through photonic integration and advanced manufacturing techniques.

Neutral Atoms

Neutral atom quantum computers arrange atoms in arrays using optical tweezers and manipulate them with lasers:

- Demonstrations of systems with over 200 programmable qubits in two-dimensional arrays

- Implementation of high-fidelity multi-qubit gates using Rydberg interactions

- Reconfigurable qubit connectivity that can be optimized for specific algorithms

- Potential for scaling to thousands of qubits while maintaining coherence

Companies like QuEra and Atom Computing are advancing this approach, with QuEra already providing access to a 256-atom system through cloud services.
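Connectivity affects cost because a two-qubit gate between non-neighboring qubits must first be routed with SWAP operations. The sketch below counts the SWAPs a simple greedy router inserts on a hypothetical linear nearest-neighbor chain. Real devices use 2D lattices and much better routing heuristics. These SWAPs are what all-to-all connectivity (as in trapped ions) or reconfigurable connectivity (as in neutral-atom arrays) can avoid.

```python
def count_swaps_linear(gates, n_qubits):
    """Count SWAPs a greedy router inserts on a linear nearest-neighbor chain.

    For each two-qubit gate (a, b), logical qubit a is moved toward b one slot
    at a time until the two are adjacent; the layout is updated as it goes.
    """
    position = list(range(n_qubits))  # position[logical] -> physical slot
    occupant = list(range(n_qubits))  # occupant[physical slot] -> logical qubit
    swaps = 0
    for a, b in gates:
        while abs(position[a] - position[b]) > 1:
            here = position[a]
            there = here + (1 if position[b] > here else -1)
            other = occupant[there]
            # SWAP the logical qubits sitting in two neighboring slots.
            occupant[here], occupant[there] = other, a
            position[a], position[other] = there, here
            swaps += 1
    return swaps


# Hypothetical workload: every pair of 6 qubits interacts once.
# With all-to-all connectivity no SWAPs would be needed at all.
n = 6
all_pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
print("SWAPs on a linear chain:", count_swaps_linear(all_pairs, n))
```

Each inserted SWAP typically costs several native two-qubit gates. Routing overhead therefore compounds the fidelity losses sketched earlier.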

Quantum Error Correction and Fault Tolerance

Perhaps the most significant recent advances have come in quantum error correction (QEC), which is essential for building large-scale, fault-tolerant quantum computers:

Surface Codes and Beyond

Surface codes remain the most widely studied QEC approach, with recent developments including:

- First demonstrations of logical qubits with error rates lower than their physical constituents

- Implementation of error-corrected operations between logical qubits

- Optimization of code parameters to reduce resource requirements

- Development of decoders that approach theoretical performance limits

Beyond surface codes, researchers are exploring alternative approaches like color codes, Floquet codes, and subsystem codes that may offer advantages in specific hardware implementations.
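A code far simpler than the surface code can illustrate how logical qubits can outperform their physical constituents. The sketch uses a distance-d bit-flip repetition code decoded by majority vote. This is a swapped-in classical toy. It assumes independent bit flips with probability p and ignores phase errors, which a real quantum code such as the surface code must also correct.

```python
from math import comb


def repetition_logical_error(p, d):
    """Logical error rate of a distance-d (odd) bit-flip repetition code.

    Assumes independent flips with probability p and majority-vote decoding,
    which fails when more than half of the d copies are flipped.
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


p = 0.01
for d in (1, 3, 5, 7):
    print(d, f"{repetition_logical_error(p, d):.2e}")
```

For p = 0.01, the logical error rate falls from 1e-2 at d = 1 to about 3e-4 at d = 3. For p above 0.5, adding redundancy makes matters worse. This is a miniature version of the threshold behavior that governs surface codes.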

Logical Operations

Recent experiments have demonstrated key milestones in logical operations:

- Implementation of non-Clifford gates (particularly T gates) on logical qubits

- Demonstration of fault-tolerant state preparation and measurement

- First implementations of small fault-tolerant quantum circuits

- Verification of error suppression scaling with code distance

These achievements represent crucial steps toward scalable quantum computation, though full fault tolerance still requires significant improvements in physical qubit quality and control systems.
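A heuristic scaling law for the surface code, p_L ≈ A (p / p_th)^((d+1)/2), often summarizes the link between physical qubit quality and the cost of fault tolerance. A rotated surface-code patch of distance d uses 2d^2 - 1 physical qubits. The constants below (threshold p_th = 1%, prefactor A = 0.1) are assumed ballpark values, not measurements. The function names are hypothetical.

```python
def logical_error_estimate(p, d, p_th=0.01, prefactor=0.1):
    """Heuristic surface-code scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2).
    p_th and prefactor are assumed, illustrative constants."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def physical_qubits(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def smallest_distance(p, target, p_th=0.01, max_d=201):
    """Smallest odd distance whose estimated logical error is <= target, or None."""
    if p >= p_th:
        return None  # at or above threshold, increasing d does not help
    for d in range(3, max_d + 1, 2):
        if logical_error_estimate(p, d, p_th) <= target:
            return d
    return None


for p in (0.001, 0.002, 0.005):
    d = smallest_distance(p, 1e-12)
    print(f"p={p}: distance {d}, {physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions, a 0.2% physical error rate needs distance 31 (1,921 physical qubits per logical qubit) to reach a 1e-12 logical error rate. A 0.5% rate needs distance 73 (10,657 physical qubits). This is why improving physical error rates reduces the overhead so sharply.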

Quantum Algorithms and Applications

As hardware capabilities advance, research into quantum algorithms and applications continues to expand:

Quantum Chemistry and Materials Science

Quantum simulation remains one of the most promising near-term applications:

- Improved algorithms for electronic structure calculations with reduced circuit depth

- Demonstrations of variational algorithms for small molecules on current hardware

- Development of error mitigation techniques specific to chemistry applications

- Integration with classical computational chemistry workflows

Recent collaborations between quantum computing companies and pharmaceutical firms aim to apply these advances to drug discovery and materials development.
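Variational algorithms split the work between a quantum device and a classical optimizer. The device prepares a parameterized state and estimates its energy, and the optimizer adjusts the parameters. The toy below simulates that loop exactly for a hypothetical one-qubit Hamiltonian H = Z + 0.5X, which is not a molecular Hamiltonian. It uses an Ry(θ) ansatz and computes gradients with the parameter-shift rule.

```python
import math

# Toy VQE: hypothetical Hamiltonian H = Z + 0.5 X on one qubit (not a molecule).
# Ansatz |psi(theta)> = Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>.


def energy(theta):
    """Exact <psi(theta)| H |psi(theta)>, standing in for a hardware estimate."""
    a0, a1 = math.cos(theta / 2), math.sin(theta / 2)
    exp_z = a0 * a0 - a1 * a1  # <Z>
    exp_x = 2 * a0 * a1        # <X> (amplitudes are real here)
    return exp_z + 0.5 * exp_x


def parameter_shift_grad(theta):
    """Parameter-shift rule, exact for gates generated by a Pauli operator."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2


theta, learning_rate = 0.1, 0.4  # arbitrary starting point and step size
for _ in range(200):             # classical optimizer: plain gradient descent
    theta -= learning_rate * parameter_shift_grad(theta)

exact_ground = -math.sqrt(1 + 0.5 ** 2)  # lowest eigenvalue of [[1, 0.5], [0.5, -1]]
print(round(energy(theta), 6), round(exact_ground, 6))
```

By the variational principle, no trial state has an energy below the true ground-state energy. The optimizer can therefore only approach the exact value, about -1.118, from above.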

Optimization and Machine Learning

Quantum approaches to optimization and machine learning continue to evolve:

- Refinement of quantum approximate optimization algorithms (QAOA) for specific problem classes

- Development of quantum neural network architectures, with provable advantages so far limited to specific, carefully constructed learning tasks

- Hybrid quantum-classical approaches that leverage the strengths of both paradigms

- Demonstrations of quantum machine learning for specific data analysis tasks

While quantum advantage for these applications remains elusive, theoretical work has identified specific regimes where quantum approaches may outperform classical methods.
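QAOA follows the same hybrid pattern. It alternates a cost-dependent phase layer with a mixing layer, then tunes the angles classically. The sketch below simulates depth-1 QAOA for MaxCut on a hypothetical three-node ring (a triangle) using an exact state vector. A coarse grid search replaces a real optimizer. The sketch illustrates the mechanics only, not any advantage.

```python
import cmath
import math
from itertools import product

# Depth-1 QAOA for MaxCut on a hypothetical 3-node ring (triangle),
# simulated with an exact pure-Python state vector over 2**3 basis states.
EDGES = [(0, 1), (1, 2), (0, 2)]
N = 3


def cut_value(z):
    """Number of edges cut by the bitstring z (bit q = side of node q)."""
    bits = [(z >> q) & 1 for q in range(N)]
    return sum(1 for a, b in EDGES if bits[a] != bits[b])


def apply_mixer(state, beta):
    """Apply exp(-i * beta * X) = cos(beta) I - i sin(beta) X to every qubit."""
    c, s = math.cos(beta), -1j * math.sin(beta)
    for q in range(N):
        new = [0j] * len(state)
        for z, amp in enumerate(state):
            new[z] += c * amp
            new[z ^ (1 << q)] += s * amp
        state = new
    return state


def qaoa_expectation(gamma, beta):
    dim = 2 ** N
    state = [complex(1 / math.sqrt(dim))] * dim  # uniform superposition
    # Cost layer: phase each basis state by exp(-i * gamma * C(z)).
    state = [amp * cmath.exp(-1j * gamma * cut_value(z)) for z, amp in enumerate(state)]
    state = apply_mixer(state, beta)
    return sum(abs(amp) ** 2 * cut_value(z) for z, amp in enumerate(state))


# Classical outer loop: a coarse grid search stands in for a real optimizer.
grid = [k * math.pi / 40 for k in range(41)]
best = max((qaoa_expectation(g, b), g, b) for g, b in product(grid, grid))
print(f"best <C> = {best[0]:.3f} at gamma={best[1]:.3f}, beta={best[2]:.3f}")
```

For reference, the maximum cut of a triangle is 2, and a uniformly random assignment cuts 1.5 edges on average.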

Cryptography and Security

The potential impact of quantum computing on cryptography continues to drive research:

- Refinement of Shor's algorithm implementations to reduce resource requirements

- Development of quantum-resistant cryptographic standards (post-quantum cryptography)

- Advances in quantum key distribution and quantum-secure communications

- Exploration of quantum-enhanced security protocols

The transition to post-quantum cryptography has accelerated. NIST selected its first quantum-resistant algorithms in 2022, published the first finalized standards (FIPS 203, 204, and 205) in August 2024, and continues to evaluate additional candidates.
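Shor's algorithm reduces factoring to finding the order r of a number a modulo N. Only that order-finding step needs a quantum computer. The sketch below keeps the classical reduction intact but substitutes brute-force order finding for quantum period finding. Brute force is exponentially slow for large N, so the sketch only runs on toy inputs such as N = 15. The function names are illustrative.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r == 1 (mod n), by brute force.

    Stands in for quantum period finding. Assumes gcd(a, n) == 1,
    otherwise the loop never terminates.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_postprocessing(a, n):
    """Return a factor pair of n derived from the order of a, or None to retry."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a^(r/2) == -1 (mod n): retry with a different a
    return gcd(y - 1, n), gcd(y + 1, n)


print(find_order(7, 15), shor_classical_postprocessing(7, 15))  # 4 (3, 5)
```

Resource-reduction work on Shor's algorithm focuses on the quantum order-finding step, since the surrounding classical arithmetic is cheap.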

Quantum Software and Development Tools

The quantum software ecosystem has matured significantly:

- Standardization of quantum programming languages and intermediate representations

- Development of hardware-aware compilation techniques that optimize for specific quantum processors

- Creation of debugging and visualization tools for quantum algorithms

- Integration of quantum simulators with high-performance computing resources

Open-source frameworks like Qiskit, Cirq, and PennyLane have expanded their capabilities, while cloud access to quantum hardware has become more standardized and accessible.
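The sketch below shows one small example of what a compiler pass does: peephole gate cancellation on a hypothetical list-of-tuples circuit representation. Two identical self-inverse gates in a row, such as two Hadamards on the same qubit, multiply to the identity and can be deleted. This is a simplified illustration, not the API of Qiskit, Cirq, or PennyLane. Production compilers track gate commutation on a dependency graph rather than relying only on list adjacency.

```python
# Hypothetical minimal IR: a circuit is a list of (gate_name, qubits) tuples.
SELF_INVERSE = {"h", "x", "y", "z", "cx"}


def cancel_adjacent_inverses(circuit):
    """Remove pairs of identical self-inverse gates that are adjacent in the list.

    A stack lets cancellations cascade (e.g. H X X H -> H H -> nothing).
    Simplification: gates on other qubits in between block cancellation.
    """
    out = []
    for gate in circuit:
        if out and gate[0] in SELF_INVERSE and out[-1] == gate:
            out.pop()  # gate * gate = identity
        else:
            out.append(gate)
    return out


circuit = [("h", (0,)), ("x", (1,)), ("x", (1,)), ("h", (0,)),
           ("cx", (0, 1)), ("cx", (0, 1)), ("z", (1,))]
print(cancel_adjacent_inverses(circuit))  # [('z', (1,))]
```

The pass leaves `cx (0, 1)` followed by `cx (1, 0)` untouched, because those gates are not inverses of each other.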

Challenges and Outlook

Despite significant progress, several challenges remain on the path to practical quantum advantage:

Scaling with Quality

The fundamental challenge remains scaling qubit counts while maintaining or improving qubit quality. Current systems face a trade-off between size and performance that must be overcome to achieve fault tolerance.

System Integration

Building practical quantum computers requires integrating quantum processing units with classical control systems, memory, and networking components. This integration presents significant engineering challenges, particularly for cryogenic systems.

Algorithm Development

While quantum algorithms with theoretical advantages exist for specific problems, developing practical implementations that account for hardware constraints and error characteristics remains challenging.

Benchmarking and Verification

As quantum systems grow more complex, verifying their operation and benchmarking their performance becomes increasingly difficult, requiring new approaches to validation and testing.
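Randomized benchmarking is one established way to compress a device's behavior into a single number. The survival probability after m random Clifford gates is fit to A·α^m + B. The error per Clifford is then r = (1 - α)(d - 1)/d, with d = 2^n. The sketch below fits α by log-linear least squares on synthetic, noise-free data generated from an assumed α = 0.995, with B fixed at 0.5. Real analyses also fit B and use noisy measured data. The function names are hypothetical.

```python
import math


def rb_survival(m, alpha, a=0.5, b=0.5):
    """RB decay model: survival probability A * alpha**m + B (A, B assumed)."""
    return a * alpha ** m + b


def fit_alpha(lengths, survivals, b=0.5):
    """Least-squares fit of log(F - B) = log(A) + m * log(alpha), with B assumed known."""
    ys = [math.log(f - b) for f in survivals]
    n = len(lengths)
    mx, my = sum(lengths) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(lengths, ys))
             / sum((x - mx) ** 2 for x in lengths))
    return math.exp(slope)


def error_per_clifford(alpha, n_qubits=1):
    d = 2 ** n_qubits
    return (1 - alpha) * (d - 1) / d


# Synthetic, noise-free data from an assumed alpha = 0.995 (not a real device).
lengths = [1, 10, 50, 100, 200, 400]
data = [rb_survival(m, 0.995) for m in lengths]
alpha = fit_alpha(lengths, data)
print(round(alpha, 6), round(error_per_clifford(alpha), 6))  # 0.995 0.0025
```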

Conclusion

Quantum computing has progressed from theoretical possibility to engineering reality, with multiple hardware approaches demonstrating increasing capabilities. The field has moved beyond proof-of-concept demonstrations to addressing the practical challenges of building useful quantum computers.

While a general-purpose, fault-tolerant quantum computer remains years away, specialized quantum processors may deliver advantages for specific applications in the nearer term. The next few years will likely see continued progress in error correction, algorithm development, and system integration, bringing us closer to realizing the transformative potential of quantum computation.

As this technology continues to mature, interdisciplinary collaboration between physicists, computer scientists, engineers, and domain experts will be essential to translate quantum computing's theoretical promise into practical applications that address real-world challenges.